As artificial intelligence advances at lightning speed, infusing our lives more rapidly and disruptively than any other technology in human history, everyone is asking, or being asked, the question “Where do you stand on the issue of AI?”

By now, most of us have found a way to handle the moment when the conversation takes us there. Depending on who and where we are, we offer an emphatic “for” or “against”, or we settle comfortably enough for a vague “I don’t know, I’m not sure”.

While a firm answer certainly makes us appear more knowledgeable, informed and competent, and resolves the intellectual discomfort of ambivalence, I propose that a degree of hesitation can be a sign of movement towards greater authenticity.

I think ambivalence is a good step forward because our attitude towards AI, and for that matter towards anything new, depends on our assessment of its benefits and dangers. “Do I trust AI to help and not hurt me and everyone I care about?” This is the question we are actually asking ourselves, and the answer we are waiting for. But such an answer cannot be easily given.

The Science of Trustworthy AI

Research suggests that most of us don’t trust AI technology [2], and our distrust is threefold [3, 4]:

- we do not trust the humans and institutions that develop and handle the technology

- we do not trust the governments that create regulatory frameworks

- we do not trust the AI processes and outputs

Trusting AI means trusting that a diffuse blend of people, institutions and technology will be on our side. And here is what researchers have concluded we need in order to trust [3]:

- For us to trust people, they need to show competence, predictability, benevolence, and integrity.

- For us to trust governing institutions, they need to demonstrate competence, reliability, transparency, and accountability, allow democratic participation, and deliver effective results that advance general welfare and justice.

- For us to trust AI systems, we need them to be reliable, robust, safe, interpretable, explainable, fair, transparent, and accountable.

Interestingly, studies show that as AI systems have matured and settled into our daily lives, our trust in the technology has increased, while our trust in the people and institutions behind it has not [4].

This means that it is not primarily the machines we distrust, but the humans.

The Problem With Putting Your Trust In Humans

The problem with trusting that people will help and not hurt us is that, as we see it, trust depends entirely on them. They have to be and behave in a certain way for us to trust them.

This leaves us in an era of unprecedented change in which, unless we are personally in charge of developing and regulating AI technology, or believe we can somehow compel people and institutions to be trustworthy, we have little to no say over its huge potential benefits and corresponding potential dangers, both for us personally and for human civilization as a whole.

According to the research, this frustrating and depressing problem of trust is quite natural, and most people share it. But is there another way?

While our chances of arriving at trust in people and technology may seem bleak, there’s an alternative way to approach the issue that draws from both cutting-edge science and ancient wisdom.

A New Perspective On Absolutely Everything

It’s understandable that we dread being a powerless drop in a relentless river of threatening events driven by the heartless few at the steering wheel, by an algorithm devoid of humanity, or by an indifferent, distant God.

But I would like to propose that we turn to both modern science and timeless spiritual insights to question this fear and the entire paradigm it is based on.

In a recent presentation, Dr. Deepak Chopra, an advocate of nondualism for decades, describes the latest advances in scientific research on the nature of reality. He refers to studies that turn our perception-based view of reality completely on its head [1].

It seems that local realism, the traditional belief that objects have definite properties of their own and can only be influenced by their immediate surroundings, is being replaced in science by nonlocal realism, which recognizes that entangled quantum particles remain correlated regardless of the distance between them [5].

This means that we live in a universe where objects are not fundamentally separate and don’t have intrinsic properties. From any distance in space or time, our measurement influences which properties an object manifests.

In other words, nonlocal realism, the bold emerging scientific view of reality, tells us what spiritual traditions have said all along: that we are non-locally entangled with each other and with everything in existence, and that what we intend to measure determines the properties of that which we observe, at any distance. What we observe becomes exactly what we are looking for.

Asking the Right Question

It is good, I think, to remain ambivalent regarding the issue of AI for as long as it takes for us to realize that the actual question is about our own agency, and not about the technology.

The obvious yet surprising way out of fear and confusion is to take both cutting-edge science and timeless wisdom seriously, and to be willing to accept their mind-boggling message. We are not powerless drops in an overwhelming flood of events. We are the mighty flow itself. This flow directs itself through conscious, intentional observation. We influence everything, all the time, not only through our actions, but also through our focus and intention.

The AI question we need to stop asking is one that places our agency outside ourselves. Instead of debating whether we’re “for” or “against” AI, we should focus on our intentions and the reality we wish to create. By shifting our perspective from powerless observers to active participants in shaping our world, we can approach AI and other technological advancements with confidence, creativity, and a sense of shared responsibility. This paradigm shift not only empowers us individually but also has the potential to guide the development and implementation of AI in ways that truly benefit humanity.

Let’s ask ourselves and each other: “Who do you want to become, and how do you intend the world to be?” Let’s give each other our deepest, most beautiful answer, with confidence and trust, knowing that this answer will immediately shape you, me, the AI, and our entire world.

Sources:

[1] Chopra, D. (2024). [Presentation on nonlocal realism and consciousness]. https://www.youtube.com/watch?feature=shared&v=x2r2jVfIbIg

[2] Ipsos. (2024). AI Monitor 2024 [Research report]. https://resources.ipsos.com/rs/297-CXJ-795/images/Ipsos-AI-Monitor-2024.pdf

[3] Scientific Reports. (2024). Trust, trustworthiness and AI governance [Scientific article]. https://www.nature.com/articles/s41598-024-71761-0

[4] Humanities and Social Sciences Communications. (2024). Trust in AI: progress, challenges, and future directions [Scientific article]. https://www.nature.com/articles/s41599-024-04044-8

[5] Scientific American. (2022). The universe is not locally real, and the physics Nobel Prize winners proved it [Article]. https://www.scientificamerican.com/article/the-universe-is-not-locally-real-and-the-physics-nobel-prize-winners-proved-it/

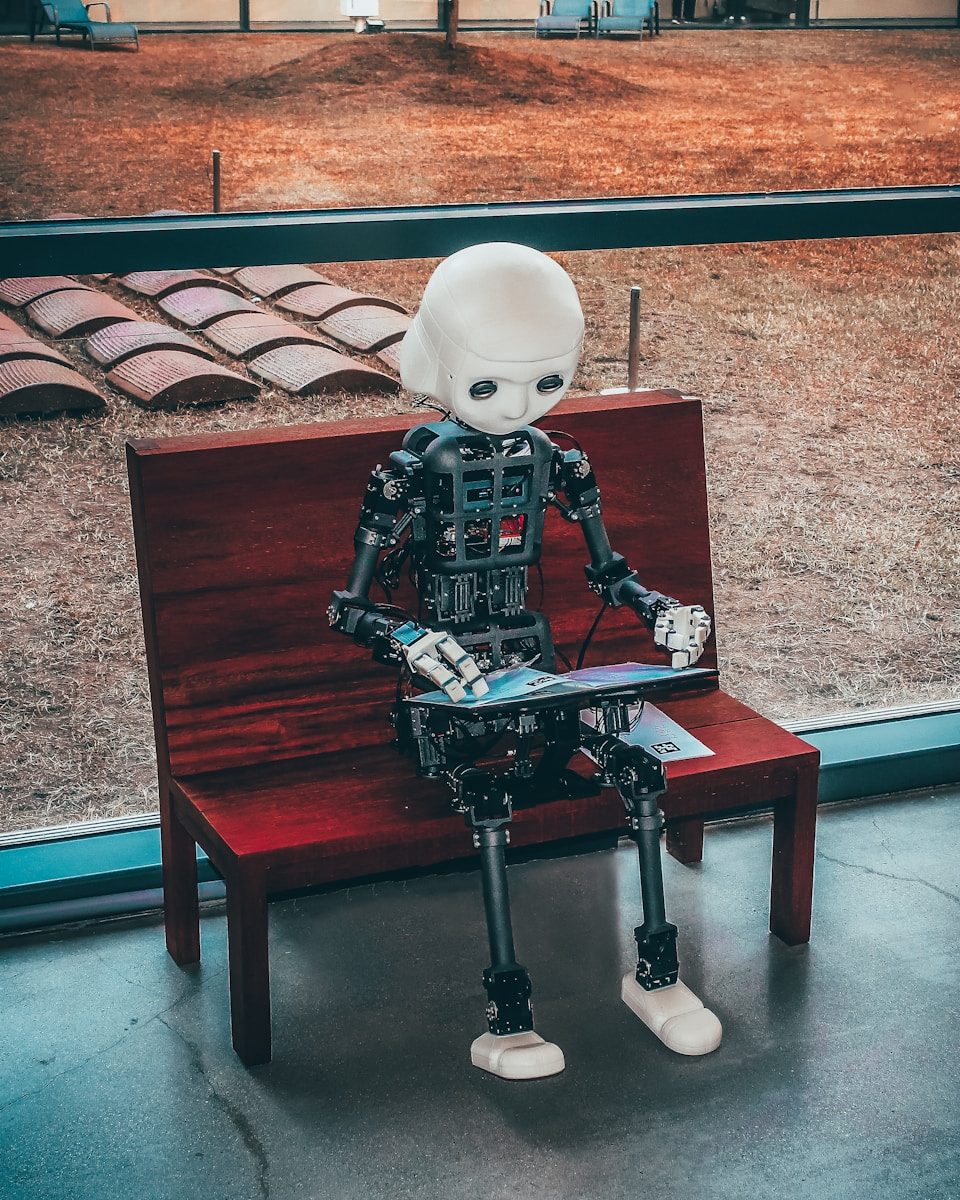

Image by Harpreet Batish from Pixabay

Aurora Carlson is an Ayurvedic counselor, meditation teacher, social worker, linguist, and the Chopra Foundation regional advisor for Sweden. Visit her at auroracarlson.com.

Aurora Carlson is an Ayurvedic counselor, meditation teacher, social worker, linguist, and the Chopra Foundation regional advisor for Sweden. Visit her on: auroracarlson.com.